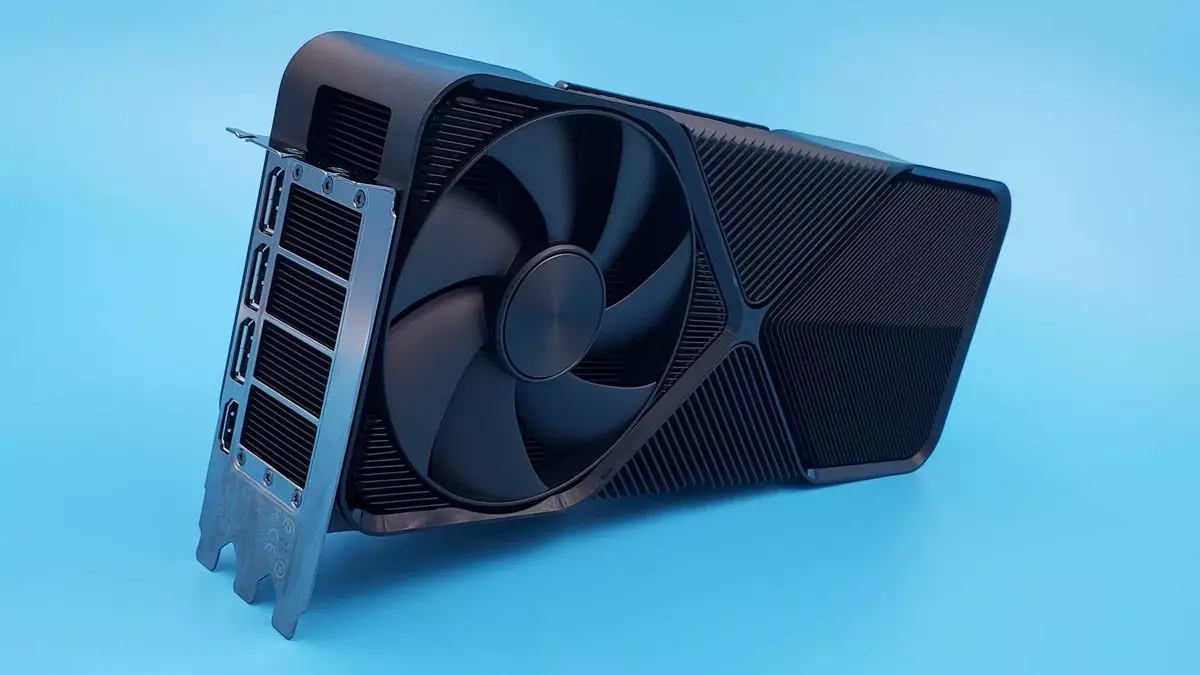

Video game graphics may be approaching a significant shift, driven by advances in artificial intelligence (AI) and machine learning. As the industry anticipates the unveiling of Nvidia’s upcoming RTX 5090 GPU, one prospect stands out: a move towards AI-driven rendering. This article explores the implications of that technology, the anticipated Blackwell architecture, and the potential future of gaming graphics.

As CES 2025 approaches, discussion of Nvidia’s Blackwell architecture is gaining momentum. The tech community is prone to speculation, but the consistent reports of AI-integrated GPU developments are too substantial to disregard. Blackwell is expected to include features that raise GPU performance and improve in-game graphics through techniques built on neural networks. The prospect of games moving away from traditional rasterized graphics, produced through conventional 3D pipelines, towards fully AI-rendered environments may eventually become a reality.

Reports attributed to various GPU manufacturers hint at a new class of graphics cards that lean heavily on AI technologies such as Deep Learning Super Sampling (DLSS), alongside enhanced ray tracing. They suggest that these GPUs will make use of neural rendering, an emerging field in which trained networks predict parts of an image instead of computing every pixel conventionally, with the aim of improving both speed and image quality.

Neural Rendering: Revolutionizing Game Graphics

Nvidia’s focus on neural rendering could mark a transformative period for graphics processing. Rather than relying solely on conventional 3D pipelines, which can be labor-intensive to build content for, neural rendering aims to make the rendering engine behave like an intelligent system that can assess, predict, and generate graphics in real time. Current technologies such as DLSS already use AI to interpolate frames and upscale lower-resolution images, but the goal of neural rendering extends well beyond that.
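To make "interpolate frames and upscale lower-resolution images" concrete, here is a minimal sketch of the two operations in their simplest, non-learned form. It is not DLSS: DLSS relies on trained neural networks and additional inputs from the game engine, whereas this uses plain bilinear filtering and a linear blend as stand-ins. The function names `upscale_bilinear` and `interpolate_frames` and the tiny 2x2 test image are assumptions made for illustration.

```python
def upscale_bilinear(image, factor):
    """Upscale a 2D grayscale image (list of rows of floats) by an integer factor."""
    src_h, src_w = len(image), len(image[0])
    out = []
    for y in range(src_h * factor):
        # Map the output pixel centre back into source coordinates, clamped to the image.
        sy = min(max((y + 0.5) / factor - 0.5, 0.0), src_h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, src_h - 1)
        fy = sy - y0
        row = []
        for x in range(src_w * factor):
            sx = min(max((x + 0.5) / factor - 0.5, 0.0), src_w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, src_w - 1)
            fx = sx - x0
            # Blend the four nearest source pixels.
            top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
            bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


def interpolate_frames(frame_a, frame_b, t=0.5):
    """Blend two equally sized frames; t=0 gives frame_a, t=1 gives frame_b."""
    return [
        [a * (1 - t) + b * t for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(frame_a, frame_b)
    ]


low_res = [[0.0, 1.0],
           [1.0, 0.0]]
high_res = upscale_bilinear(low_res, 2)  # 2x2 image becomes 4x4
mid_frame = interpolate_frames(low_res, [[1.0, 1.0], [1.0, 1.0]])
```

A learned upscaler replaces the fixed blend weights above with a network's prediction, which is where the quality gain over simple filtering is expected to come from.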

By harnessing neural networks, games could eventually be rendered with minimal human intervention. Picture an AI engine that autonomously interprets data about a scene (objects, movement, and other environmental factors) and produces the final image frame by frame, within milliseconds. The ramifications stretch beyond better visuals; they raise questions about game design, development processes, and player interaction.

Benefits of AI in Gaming and Content Creation

AI does more than raise graphical fidelity in games; it also improves content creation workflows. Better AI-driven upscaling raises video quality, which could put high-quality output within reach of smaller teams in both video production and game development. Beyond enriching the gaming experience, these advances would be valuable to content creators who want high-quality results without a disproportionate amount of manual work.

Generative AI plays a part as well. When AI systems take over tasks that traditionally required substantial human time and effort, content creators can focus on the conceptual and creative dimensions and leave the minutiae to the machine. This convergence of AI and the creative industries points to a future in which collaboration between humans and AI produces exceptionally complex virtual environments.

Evolving Beyond the Hype: What’s Realistic?

Despite the optimism surrounding neural rendering and AI-driven graphics capabilities, skepticism remains valid. The idea that every pixel in a game will be rendered by AI alone might be overly ambitious. Nvidia does not appear to be proposing a fully AI-rendered system; rather, it seems to intend to delegate specific stages of the rendering pipeline to AI, improving efficiency while maintaining quality.

AI techniques such as Real-Time Neural Radiance Caching for Path Tracing represent a step forward in gaming graphics, reducing noise while lowering the cost of path tracing calculations. Such innovations would augment current rendering methods rather than replace them entirely, which points to an evolution rather than a revolution.
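The idea behind radiance caching can be sketched in a few lines: instead of following every light path through many bounces, the renderer stops early and asks a cache for the light the rest of the path would have gathered. The toy below is not Nvidia's implementation. A plain lookup table stands in for the neural network, and the four-patch scene, the albedo, the learning rate, and the function names `trace` and `train_cache` are all assumptions chosen for illustration.

```python
import random

# Hypothetical toy scene: four surface patches, of which patch 2 emits light.
EMISSION = [0.0, 0.0, 4.0, 0.0]
ALBEDO = 0.5  # assumed fraction of light reflected at every bounce


def trace(patch, bounces, rng, cache=None):
    """Estimate the radiance leaving `patch` by following one random path."""
    radiance, throughput = 0.0, 1.0
    for _ in range(bounces):
        radiance += throughput * EMISSION[patch]
        throughput *= ALBEDO
        patch = rng.randrange(len(EMISSION))  # bounce to a random patch
    if cache is not None:
        # Terminate early: the cache supplies the remainder of the path.
        radiance += throughput * cache[patch]
    return radiance


def train_cache(steps, rng, learning_rate=0.01):
    """Fit the cache online from one-bounce paths that end in the cache itself."""
    cache = [0.0] * len(EMISSION)
    for _ in range(steps):
        patch = rng.randrange(len(EMISSION))
        target = trace(patch, 1, rng, cache)
        # Nudge the stored value towards the new estimate (table stand-in for a network update).
        cache[patch] += learning_rate * (target - cache[patch])
    return cache


rng = random.Random(0)
cache = train_cache(20000, rng)

samples = 2000
# One bounce plus a cache lookup versus a long, uncached path from the light patch.
short = sum(trace(2, 1, rng, cache) for _ in range(samples)) / samples
full = sum(trace(2, 32, rng) for _ in range(samples)) / samples
```

For this toy scene the exact radiance leaving patch 2 works out to 5.0, and both `short` and `full` should land close to it. The cached version reaches that estimate with a single bounce per path, which is the kind of saving the technique is after.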

As Nvidia aligns its future GPU designs with AI technologies, the implications for gamers and developers could be profound. The gains may go beyond aesthetics: substantial improvements in rendering speed, efficiency, and performance could transform the gaming experience.

The move towards deeper AI integration reflects a larger narrative: the gaming industry is entering an era shaped by AI. While it would be prudent to temper expectations about the extent of neural rendering’s impact, the landscape is ripe for innovation. As we await the RTX 50-series launch, the future of gaming graphics looks bright, marked by a powerful synergy of technology and creativity.